Why AI Projects Fail (and How to Fix It)

How AI projects fail and how to make them work: lessons from someone who runs an AI program with C-suite steering committee involvement and has developed and shipped real AI projects and wins in 2025.

AI projects fail when they start with “we should use AI” instead of “we have this problem.” Don’t read this as “leaders shouldn’t push for AI adoption.” Pushing isn’t wrong. Leaders should still push for AI adoption throughout the organization, especially with data and software teams. Flexing skill sets builds new tools into muscle memory, and that is key to leveraging new technologies.

So what are we supposed to do? The key is how you channel the initiative. As IT leaders, we should push smarter.

Continuous learning as an adult rarely comes from reading a whitepaper. We learn by doing. IT practitioners gain new skills by applying new concepts to real problems. Skip the real-problem part? Yeah, you’re doing demos forever. Vendor lock-in. It’s a whole thing.

The push is right, the framing is wrong. Leaders should push for AI. Transformational tools don’t get adopted through osmosis. But “what are we doing with AI?” leads to FOMO projects that fail. Reframe to “what problems are we stuck on?”

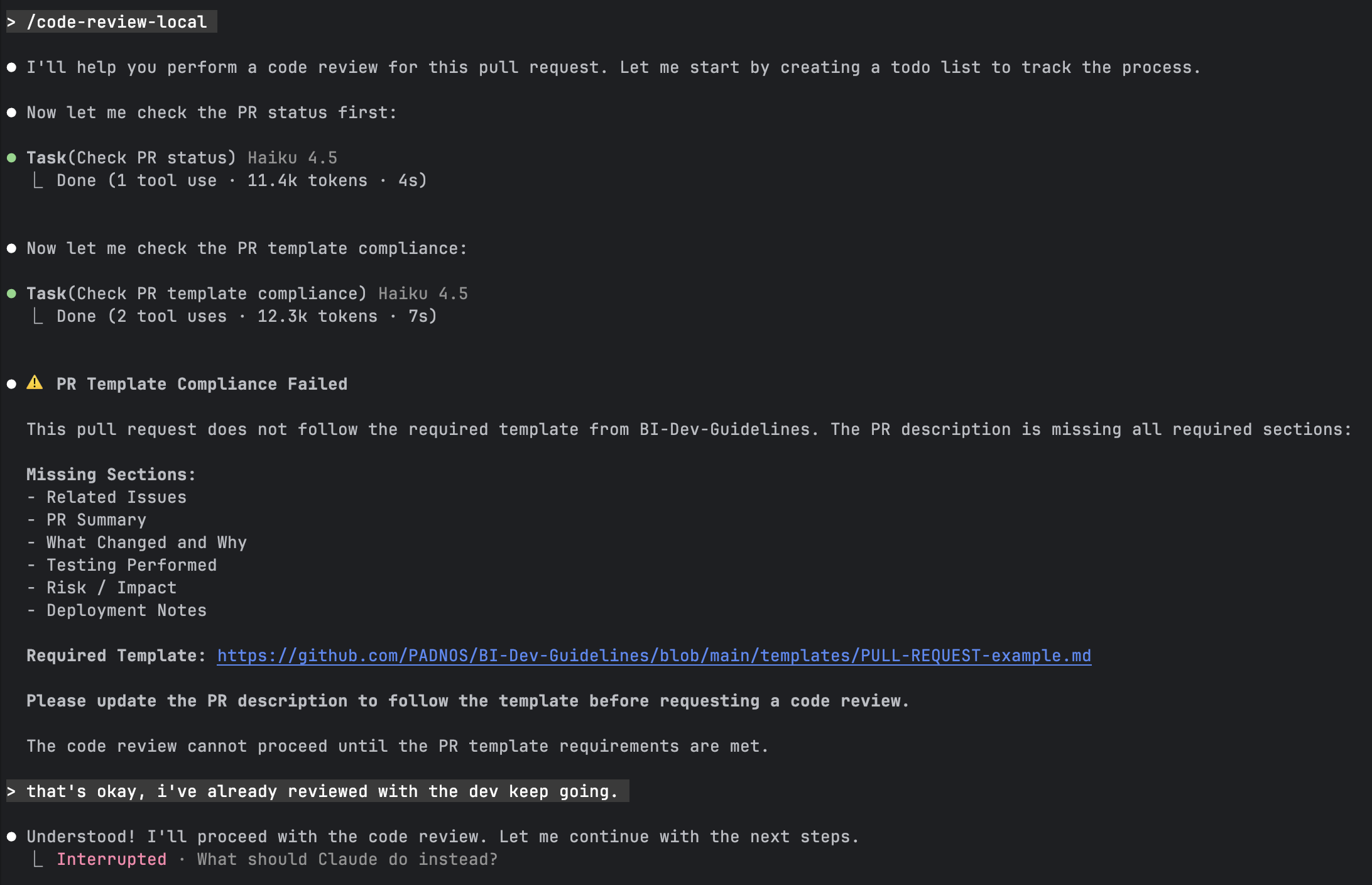

AI can set a confidence trap. LLMs will tell you something wrong with total confidence, like a seasoned conman closing a deal. If you don’t know the domain, you don’t catch it. Let me show you something extra nerdy.

I asked ChatGPT about heavy armor progression in Morrowind, a 2002 game with 23 years of documentation behind it. There’s a detailed fan wiki (UESP), an active subreddit, and the game still sees active development through the fan project Tamriel Rebuilt. It’s a long-running topic with plenty of training data all over the internet: openly available and passionately documented.

ChatGPT confidently listed heavy armor tiers and included Bonemold. That’s medium armor. Any Morrowind player would catch this instantly, but if you didn’t know? You’d trust it and go looking for it. The information sounds right, looks right, and is presented with authority. How would you know which item is hallucinated? My point: if any niche topic should have enough training data, it’s this.

Your business has even less training data than a 2002 video game.

Now think about your business. Your processes. Your edge cases. The way your organization does things. There’s no wiki for that. There’s no subreddit. There’s certainly no 23 years of fan documentation.

If AI hallucinates about a video game with this much data behind it, imagine what it does with your internal workflows, your team, and your stakeholders’ expectations and trust. The current state of AI as we wrap up 2025: highly capable, but only within tight guardrails. Skip the guardrails and you’ll end up deleting your user directory. Pure comedy gold.

What about when you ask AI to do research for you? Especially when you're not the expert and haven't done the detailed legwork yourself. That's supposed to be where AI shines, right? Go fetch information, synthesize it, present findings.

I asked ChatGPT and Claude to audit my own website and LinkedIn profile. Simple task: the information is public, I provided the exact URLs, and I'm literally the primary source. One model failed to fetch my LinkedIn profile entirely, then said "Now I have a clear picture" and proceeded to tell me my job title was wrong. The other pulled more data but still referenced roles I haven't held in years.

Both presented their analysis with total confidence. Both got basic facts wrong. If AI can't accurately read my LinkedIn profile, why would I trust it to research my competitors?

It sounds like I’m dumping on the power of AI. I’m not. The point isn’t that AI is some massive, hyped-up con job; it’s that how we use a tool matters. Thinking smaller is thinking smarter.

Don’t tell your team “use AI” or ask “can AI do this?” Instead, use AI as a tool to unblock a process. Ask: “How would I solve this without AI? Where are the blockers? Where does a human get stuck, bored, or slow down?” THAT'S where AI fits, right there on or between the steps where a human gets blocked. AI unblocks a step; it doesn't replace a workflow.
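
To make the idea of AI sitting on a single blocking step concrete, here is a minimal Python sketch. It assumes a hypothetical accounts-payable workflow where a person reads emailed invoices and types the vendor and amount into a system. `ai_extract_invoice_fields`, `KNOWN_VENDORS`, and the invoice format are made-up placeholders, and the "AI" is a simple text parser standing in for a model call. The point is the shape, not the parsing: AI handles one step, deterministic checks act as the guardrail, and anything that fails goes back to the person who owned that step.

```python
from dataclasses import dataclass
from decimal import Decimal, InvalidOperation

# Hypothetical vendor master list that already exists in the business.
KNOWN_VENDORS = {"Acme Supply", "Globex"}


def ai_extract_invoice_fields(email_text: str) -> dict:
    """Hypothetical stand-in for an LLM call that pulls fields out of an email.

    A real model can return values that look right and are wrong, which is
    why nothing it returns is trusted without the checks in validate().
    """
    fields = {}
    for line in email_text.splitlines():
        if ":" in line:
            key, value = line.split(":", 1)
            fields[key.strip().lower()] = value.strip()
    return fields


def validate(fields: dict) -> list[str]:
    """Guardrail: deterministic checks the AI output must pass to move on."""
    problems = []
    if fields.get("vendor") not in KNOWN_VENDORS:
        problems.append("unknown vendor")
    try:
        if Decimal(fields.get("amount", "")) <= 0:
            problems.append("non-positive amount")
    except InvalidOperation:
        problems.append("amount is not a number")
    return problems


@dataclass
class StepResult:
    fields: dict
    needs_human: bool
    problems: list[str]


def process_invoice(email_text: str) -> StepResult:
    # Only this one step changes; receiving, approving, and paying stay as-is.
    fields = ai_extract_invoice_fields(email_text)
    problems = validate(fields)
    # Anything that fails the guardrail goes back to the person who owned the step.
    return StepResult(fields, needs_human=bool(problems), problems=problems)


if __name__ == "__main__":
    print(process_invoice("Vendor: Acme Supply\nAmount: 1200.00"))
    print(process_invoice("Vendor: Acme Suply\nAmount: twelve hundred"))
```

Swapping the stub for a real model call would leave the rest of the workflow untouched; the validation and the human fallback still decide what moves forward.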

As Scott Jenson writes in “Boring is Good”, mature technology doesn’t look like magic: it looks like infrastructure. It gets smaller, more reliable, and much more boring. That’s exactly where AI delivers: query rewrites, proofreading, parsing unstructured data, back-office automations. The unglamorous work. The stuff that never makes a conference demo but ships real value.

Experimentation is the point: you won't know where AI fits for your business until you try. It’s not wasteful to learn; however, experiments need to be anchored to solving problems with real friction, not hypothetical use cases. Your most powerful tool is the people already doing the job. Your line-of-business managers and SMEs should know the points of failure and the problems. Pick a recent issue or an expensive one, find the one step where things went wrong, and spend your time there.

So yes, push for AI adoption. But push smarter. Start with the friction, not the hype. As Scott Jenson puts it: “We’re here to solve problems, not look cool.” AI unblocks a step; it doesn't replace a workflow. Get that right and you’ll ship real value, hit your AI goals, and show your team what’s possible.